What do Einstein and AI have in common?

When Albert Einstein developed his theory of relativity, he didn’t do it in isolation. He stood on the shoulders of giants. He studied Maxwell to understand light, turned to Lorentz for insights into motion and contraction, and leaned on Poincaré to grasp the principle of relativity. Without their work, his theory wouldn’t exist. Zero. Nothing.

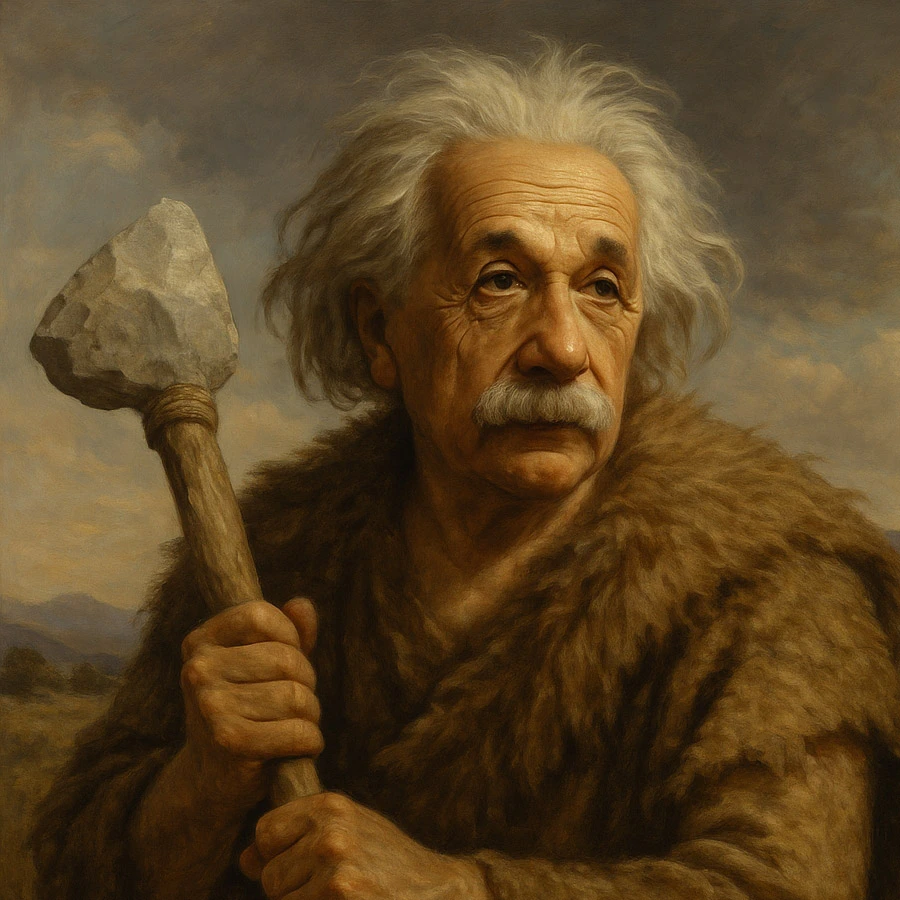

Now imagine someone told him: “Sorry, copyright rules. You need permission first.” Want to read Poincaré? Fill out a form. Wait for approval. No learning. And just like that — back to the Stone Age.

AI is our new Einstein

Today, artificial intelligence is in the same position Einstein once was. It’s learning. And to learn, it needs to read — a lot. Books, articles, music, paintings — everything. That’s its education. Its textbooks. Its Poincaré and Maxwell.

The problem? All of that content is owned by someone. Which means: copyrights. And that means: you need to ask permission. From everyone. Literally, everyone.

Nick Clegg: “It’s just not realistic”

Recently, Nick Clegg, Meta’s former president of global affairs, said out loud what many in tech are quietly thinking:

“I just don’t see how you can approach everyone and ask for permission. It’s not workable. And if you try to do that in the UK, while no one else does, you’ll kill the AI industry in this country overnight.”

His solution? A sort of opt-out system. Don’t want AI using your work? Say so. But that doesn’t solve the core issue: how can AI learn without breaking the rules?

We’re at a fork in the road

This is a historic crossroads. On one side, we have millions of artists and creators who see their work not as data, but as part of their soul. On the other — tech companies trying to train AI to understand and think.

If we lock it all behind red tape, AI will stay like a tribesman with an iPad: powerful tool, no clue how to use it. But if we throw the gates wide open, we risk devaluing the work of real people — and our culture along with it.

Why does this matter?

For writers and artists — it’s a fight for fairness. For AI developers — it’s about survival. And for the rest of us? This is the moment we decide whether AI becomes a tool for humanity — or just another corporate weapon locked behind paywalls.

If access to knowledge becomes a privilege for the wealthy, we won’t get an AI that uplifts us. We’ll get one that polices us.

It’s ironic: to build something truly intelligent and useful, AI has to go through the same process we do — reading, mimicking, reflecting. As menscult.net noted, even Einstein was a kind of “plagiarist” — in the best sense of the word.

So what’s next?

This isn’t just a legal debate. It’s a question of our future. Either we create a world where knowledge is accessible, or we hand Einstein a stone axe and wonder why he’s not inventing anything.